Challenge Description

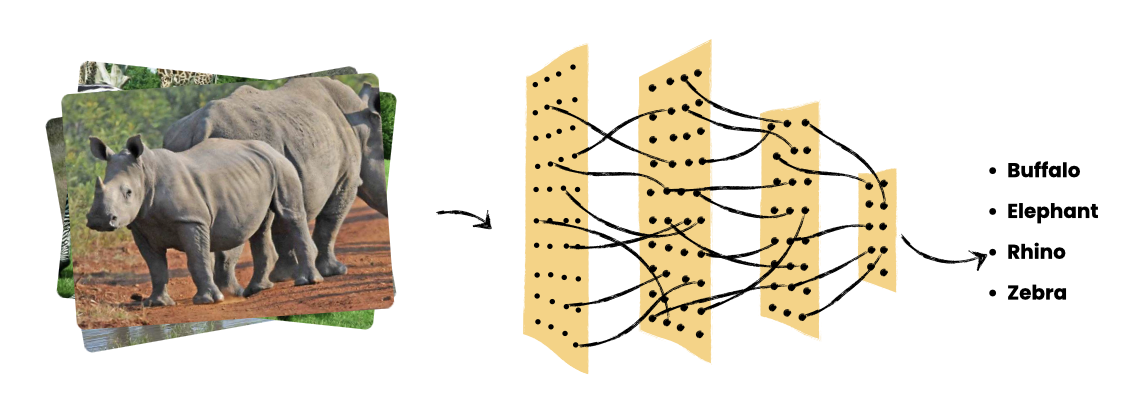

As a participant, you are tasked with developing a machine learning model that can accurately classify images of African wildlife into one of four categories:

- Buffalo

- Elephant

- Rhino

- Zebra

Dataset

The contest provides a dataset split into three parts:

- Training set: 1,049 labeled images (at least 254 per class)

- Validation set: 1,000 labeled images (at least 51 per class)

- Test set: Held out by the instructor for evaluating algorithms

All images are 128x128x3 pixels in JPEG format. The dataset includes various lighting conditions, angles, and backgrounds to challenge participants' models. Samples from the dataset are shown below.
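Before training, it can help to confirm that a downloaded split really contains the per-class counts listed above. The following is a minimal sketch, not part of the official starter code. It assumes a hypothetical layout with one subfolder per class (`buffalo/`, `elephant/`, `rhino/`, `zebra/`) inside each split directory; the folder names and the helper names `count_images_per_class` and `classes_below_minimum` are illustrative assumptions.

```python
from pathlib import Path

# Assumed (hypothetical) subfolder names, one per class.
CLASS_NAMES = ("buffalo", "elephant", "rhino", "zebra")
JPEG_SUFFIXES = {".jpg", ".jpeg"}


def count_images_per_class(split_dir):
    """Count JPEG files in each class subfolder of one dataset split."""
    counts = {}
    for class_name in CLASS_NAMES:
        class_dir = Path(split_dir) / class_name
        if class_dir.is_dir():
            counts[class_name] = sum(
                1 for path in class_dir.iterdir()
                if path.suffix.lower() in JPEG_SUFFIXES
            )
        else:
            # A missing folder is reported as zero images.
            counts[class_name] = 0
    return counts


def classes_below_minimum(counts, minimum):
    """Return the class names that have fewer than `minimum` images."""
    return sorted(name for name, n in counts.items() if n < minimum)
```

For example, `classes_below_minimum(count_images_per_class("train"), 254)` should return an empty list if the training split matches the description, where `"train"` stands in for wherever the training folder actually lives.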

Starter Code

Participants can use the following starter code and data to begin their projects:

Rules

- No teams allowed - individual effort.

- Any machine learning approach permitted (CNNs, Transformers, etc.)

- No external datasets allowed

- Pre-trained models are allowed but must be declared

CNN Architectures for Image Classification

Below are pre-trained CNN architectures, ordered from most to least Colab-friendly (that is, from lowest to highest resource requirements):

MobileNet (MobileNetV2/V3):

- Extremely lightweight and designed for mobile devices.

- Trains very quickly and requires minimal resources.

- Popular for applications where efficiency is crucial.

- Good baseline model.

EfficientNet (EfficientNetB0, B1):

- Balances accuracy and efficiency.

- EfficientNetB0 and B1 are relatively small and can be trained on Colab without excessive resource usage.

- Very popular, with state-of-the-art results.

InceptionV3:

- More complex than MobileNet, but still reasonably efficient.

- Uses inception modules to capture features at different scales.

- Good balance of accuracy and resource usage.

ResNet (ResNet50):

- A good compromise between accuracy and resource usage.

- ResNet50 is a common choice for transfer learning.

- Very popular and gives good results.

Xception:

- Generally more resource-intensive than the previous models, but still usable on Colab.

- Performs well on many complex image classification tasks.

VGG16:

- More resource-intensive due to its depth and fully connected layers.

- Can be challenging to train on Colab with limited resources.

- The architecture is very simple to understand.
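To illustrate how one of the architectures above might be used for transfer learning on this task, here is a minimal sketch based on MobileNetV2 from `tf.keras.applications`. It is one possible setup, not the contest's reference solution. The function name `build_classifier`, the dropout rate, and the single dense head are assumptions chosen for illustration. Loading `weights="imagenet"` means using a pre-trained model, which the rules require you to declare.

```python
import tensorflow as tf

NUM_CLASSES = 4              # Buffalo, Elephant, Rhino, Zebra
IMAGE_SHAPE = (128, 128, 3)  # matches the dataset's image size


def build_classifier(weights="imagenet"):
    """Build a frozen MobileNetV2 backbone with a small 4-class head.

    Pass weights=None to build the same network with random
    initialization (no download, no pre-training).
    """
    base = tf.keras.applications.MobileNetV2(
        input_shape=IMAGE_SHAPE,
        include_top=False,   # drop the 1000-class ImageNet head
        weights=weights,
    )
    base.trainable = False   # train only the new head at first

    inputs = tf.keras.Input(shape=IMAGE_SHAPE)
    # MobileNetV2 expects pixel values scaled to [-1, 1].
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)  # assumed rate, tune as needed
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # integer class labels
        metrics=["accuracy"],                    # top-1 accuracy
    )
    return model
```

Freezing the backbone keeps training fast on Colab because only the final dense layer is updated. Unfreezing some of the top backbone layers with a lower learning rate is a common follow-up step once the head has converged.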

Evaluation Metric

The primary evaluation metric for this contest is Top-1 Accuracy. Top-1 accuracy measures the percentage of test images for which the model's top prediction matches the ground truth label. In simpler terms, it's how often the model correctly predicts the single most likely class for each image. This is a standard and intuitive metric for evaluating the performance of multi-class classification models in computer vision.
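The metric described above can be sketched in a few lines of plain Python. This is an illustration of the definition, not the instructor's evaluation script. The function name `top1_accuracy` and the class index order (0 = Buffalo, 1 = Elephant, 2 = Rhino, 3 = Zebra) are assumptions.

```python
def top1_accuracy(class_scores, true_labels):
    """Fraction of samples whose highest-scoring class equals the true label.

    class_scores: one list of per-class scores (e.g. softmax outputs) per image.
    true_labels:  one integer class index per image.
    """
    if len(class_scores) != len(true_labels):
        raise ValueError("class_scores and true_labels must have equal length")
    if not true_labels:
        raise ValueError("at least one sample is required")

    correct = 0
    for scores, label in zip(class_scores, true_labels):
        # Index of the largest score is the model's top prediction.
        predicted = max(range(len(scores)), key=scores.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(true_labels)


# Assumed index order: 0 = Buffalo, 1 = Elephant, 2 = Rhino, 3 = Zebra.
scores = [
    [0.70, 0.10, 0.10, 0.10],  # predicted Buffalo,  true Buffalo
    [0.20, 0.50, 0.20, 0.10],  # predicted Elephant, true Elephant
    [0.10, 0.20, 0.30, 0.40],  # predicted Zebra,    true Rhino (wrong)
    [0.25, 0.25, 0.40, 0.10],  # predicted Rhino,    true Rhino
]
labels = [0, 1, 2, 2]
print(top1_accuracy(scores, labels))  # 0.75
```

The function returns a fraction; multiply by 100 to report it as a percentage.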

To submit your model for evaluation:

- Prepare your model file (save your model during training)

- Create a README file with:

  - Your name, index, and department

  - Brief description of your approach

  - Any special instructions for running your code

- Create a Google Drive folder and make sure it is shared with uojdeeplearning@gmail.com

- Put all your project files (code, saved model, README file) in the Google Drive folder you shared above

Evaluation Schedule

Submissions will be evaluated weekly. Results will be posted on the leaderboard by Monday at noon.