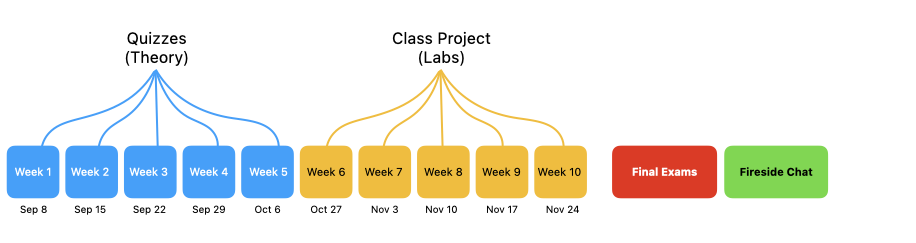

Week 8

November 10 - 14, 2025

Exam review

This lecture reviews the course material in preparation for the final exams.

Week 7

November 3 - 7, 2025

Metrics, Generative Models, Transfer Learning, and other architectures.

This lecture provides an overview of advanced topics in deep learning. We will first cover evaluation metrics such as accuracy, precision, and recall, and how to use a confusion matrix to evaluate model performance against a specific objective. Next, we'll delve into generative models, examining the principles behind creating new data, with a focus on Generative Adversarial Networks (GANs). The session will then cover transfer learning, a paradigm for reusing pre-trained knowledge to accelerate training and improve performance on new tasks, including the technique of fine-tuning. Finally, we'll introduce other important deep learning architectures: Transformers (foundational for modern NLP), Autoencoders (for unsupervised learning and dimensionality reduction), and Graph Neural Networks (GNNs, for graph-structured data), with insight into their core mechanisms and applications.
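As a rough illustration of how accuracy, precision, and recall all fall out of the same confusion matrix, here is a minimal pure-Python sketch for a binary task. The function names and the toy labels are made up for this example and are not part of the course material.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary classification task."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # predicted positive, actually positive
        elif pred == positive:
            fp += 1  # predicted positive, actually negative
        elif truth == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1  # predicted negative, actually negative
    return tp, fp, fn, tn


def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical labels, chosen only to make the three metrics differ.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

print(confusion_counts(y_true, y_pred))  # (3, 2, 1, 2)
print(binary_metrics(y_true, y_pred))
```

On these labels accuracy is 0.625, precision is 0.6, and recall is 0.75, which shows why the metric should be chosen to match the objective: the same predictions look better or worse depending on which kind of error matters.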

Week 6

October 27 - October 31, 2025

CNN: receptive fields, applications, and face classification project.

Continuing our deep learning journey, this lecture will introduce Convolutional Neural Networks (CNNs), a specialized type of neural network designed to process data with a grid-like topology, such as images. While traditional neural networks treat every pixel as an independent input, CNNs use a series of filters, or "kernels," to automatically and adaptively learn spatial hierarchies of features, from simple edges and textures to complex objects. We'll explore the core components—convolutional layers, pooling layers, and fully connected layers—and see how they leverage concepts like parameter sharing and sparse connectivity to make image analysis more efficient and accurate than with standard neural networks.
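Since this week's title mentions receptive fields, the sketch below shows one common way to compute how many input pixels along one axis influence a single output unit of a stack of convolution and pooling layers. The layer stack is hypothetical, and the helper assumes square kernels with no dilation.

```python
def receptive_field(layers):
    """Receptive field size (in input pixels, along one axis) of one output unit.

    `layers` is a list of (kernel_size, stride) pairs, ordered from the
    input side to the output side. Dilation is assumed to be 1.
    """
    size = 1  # a unit in the input sees exactly one pixel
    jump = 1  # distance in input pixels between neighbouring units
    for kernel_size, stride in layers:
        size += (kernel_size - 1) * jump
        jump *= stride
    return size


# Hypothetical stack: two 3x3 convolutions, a 2x2 max-pool with stride 2,
# then another 3x3 convolution.
stack = [(3, 1), (3, 1), (2, 2), (3, 1)]
print(receptive_field(stack))  # 10
```

Two stacked 3x3 convolutions already see a 5x5 input patch, and the pooling layer doubles the growth contributed by every later layer, which is how deep layers come to respond to whole objects rather than local edges.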

Week 5

October 6 - October 10, 2025

Convolutional Neural Networks

Continuing our deep learning journey, this lecture will introduce Convolutional Neural Networks (CNNs), a specialized type of neural network designed to process data with a grid-like topology, such as images. While traditional neural networks treat every pixel as an independent input, CNNs use a series of filters, or "kernels," to automatically and adaptively learn spatial hierarchies of features, from simple edges and textures to complex objects. We'll explore the core components—convolutional layers, pooling layers, and fully connected layers—and see how they leverage concepts like parameter sharing and sparse connectivity to make image analysis more efficient and accurate than with standard neural networks.
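To make the efficiency claim behind parameter sharing concrete, the following sketch counts trainable parameters for a fully connected layer and for a convolutional layer applied to the same input. The input size (32x32 RGB) and the 64 units or filters are illustrative assumptions, not values taken from the course.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


def conv_params(kernel_h, kernel_w, in_channels, n_filters):
    """Weights plus biases of a 2D convolutional layer.

    Each filter is reused at every spatial position, so the count does
    not depend on the image height or width.
    """
    return (kernel_h * kernel_w * in_channels + 1) * n_filters


# Hypothetical input: a 32x32 RGB image.
height, width, channels = 32, 32, 3

dense = dense_params(height * width * channels, 64)
conv = conv_params(3, 3, channels, 64)

print(dense)  # 196672
print(conv)   # 1792
```

Under these assumptions the convolutional layer needs roughly a hundredth of the parameters, and that number stays fixed if the image gets larger.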

Week 4

September 29 - October 3, 2025

Convolutional Neural Networks

Continuing our deep learning journey, this lecture will introduce Convolutional Neural Networks (CNNs), a specialized type of neural network designed to process data with a grid-like topology, such as images. While traditional neural networks treat every pixel as an independent input, CNNs use a series of filters, or "kernels," to automatically and adaptively learn spatial hierarchies of features, from simple edges and textures to complex objects. We'll explore the core components—convolutional layers, pooling layers, and fully connected layers—and see how they leverage concepts like parameter sharing and sparse connectivity to make image analysis more efficient and accurate than with standard neural networks.
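As a small illustration of what a single kernel does, the sketch below slides a 2x2 filter over a tiny image with no padding and a stride of 1. Like most deep learning frameworks, it computes a cross-correlation (the kernel is not flipped). The image and the hand-written vertical-edge kernel are made up for the example; in a trained CNN the kernel values are learned.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The same kernel weights are reused at every (i, j): parameter sharing.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the column where the brightness changes, and each output value depends on just four neighbouring pixels, which is the sparse connectivity mentioned above.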

Week 3

September 22 - 26, 2025

Training and Optimizing a Neural Network

This week's lecture will delve into the mechanisms behind how a neural network learns. We'll start with backpropagation, the core algorithm that allows a network to "learn" from its errors. It works by propagating the error from the output layer backward through the network, calculating how much each neuron's weights contributed to that error. This process is essentially a fancy application of the chain rule from calculus. Next, we'll connect this to gradient descent, the optimization algorithm that uses the error information from backpropagation to adjust the weights and biases of the network. Think of gradient descent as guiding the network down a hill to the lowest point of error, with backpropagation showing it which way is "down." Finally, we will discuss overfitting, a common problem where a model learns the training data too well, memorizing noise and specific examples rather than generalizing to new data. We'll explore strategies to combat overfitting and ensure our models are robust and effective.
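The interplay between the two algorithms can be sketched on the smallest possible model: a single linear neuron trained with mean squared error. With only one layer the chain rule has a single step, so this is a simplified illustration rather than full multi-layer backpropagation. The data, learning rate, and epoch count are arbitrary choices for the example.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit pred = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            pred = w * x + b                    # forward pass
            d_loss_d_pred = 2 * (pred - y) / n  # derivative of the MSE w.r.t. pred
            grad_w += d_loss_d_pred * x         # chain rule: d(pred)/dw = x
            grad_b += d_loss_d_pred             # chain rule: d(pred)/db = 1
        # Gradient descent step: move against the gradient ("downhill").
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The backward step supplies the direction (the gradients) and the update step decides how far to move (the learning rate). In a deeper network the same pattern applies, with the chain rule extended through every layer.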

Week 2

September 15 - 19, 2025

Biological and Artificial Neural Networks

This week, we're diving into deep neural networks, the foundation of modern AI. Our journey begins with an exploration of the biological neural networks that inspired this technology, providing essential context for understanding how these systems work. We'll then break down the core components, starting with the perceptron, and progressively build up to more complex neural networks. You'll gain a solid grasp of how data flows through these networks via forward propagation and, crucially, how we measure their performance by computing the loss of a deep neural network. Get ready to build a strong theoretical foundation for the hands-on labs to come!
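Forward propagation and the loss computation can be sketched in a few lines of plain Python. The example below assumes sigmoid activations and a binary cross-entropy loss, which are common choices but not necessarily the ones used in the lecture. The weights, biases, and input are hypothetical values picked by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_forward(inputs, weights, biases):
    """One layer of perceptron-like units; weights[j] holds the incoming weights of unit j."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Forward propagation: feed each layer's output into the next layer."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_forward(activation, weights, biases)
    return activation


def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Loss for one example; y_prob is clipped to avoid log(0)."""
    y_prob = min(max(y_prob, eps), 1 - eps)
    return -(y_true * math.log(y_prob) + (1 - y_true) * math.log(1 - y_prob))


# Hypothetical network: 2 inputs -> 2 hidden units -> 1 output unit.
layers = [
    ([[0.5, -0.3], [0.8, 0.2]], [0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                    # output layer
]

prediction = forward([1.0, 2.0], layers)[0]
loss = binary_cross_entropy(1, prediction)
print(prediction, loss)
```

A single perceptron is the special case of one layer with one unit. The loss is the number that training later tries to reduce.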

Week 1

September 8 - 12, 2025

Introduction and Logistics

In the lecture portion of Week 1, we will provide a comprehensive overview of deep learning, including its fundamental concepts, applications, and the role of neural networks. We will also discuss the TensorFlow/Keras framework, which will be the primary tool used throughout the course. In the lab session, students will set up their development environment using Google Colab, experiment with a neural network, and familiarize themselves with the TensorFlow/Keras API.